Google’s AI Pivot: Ethics vs. Power in the Defense Industry

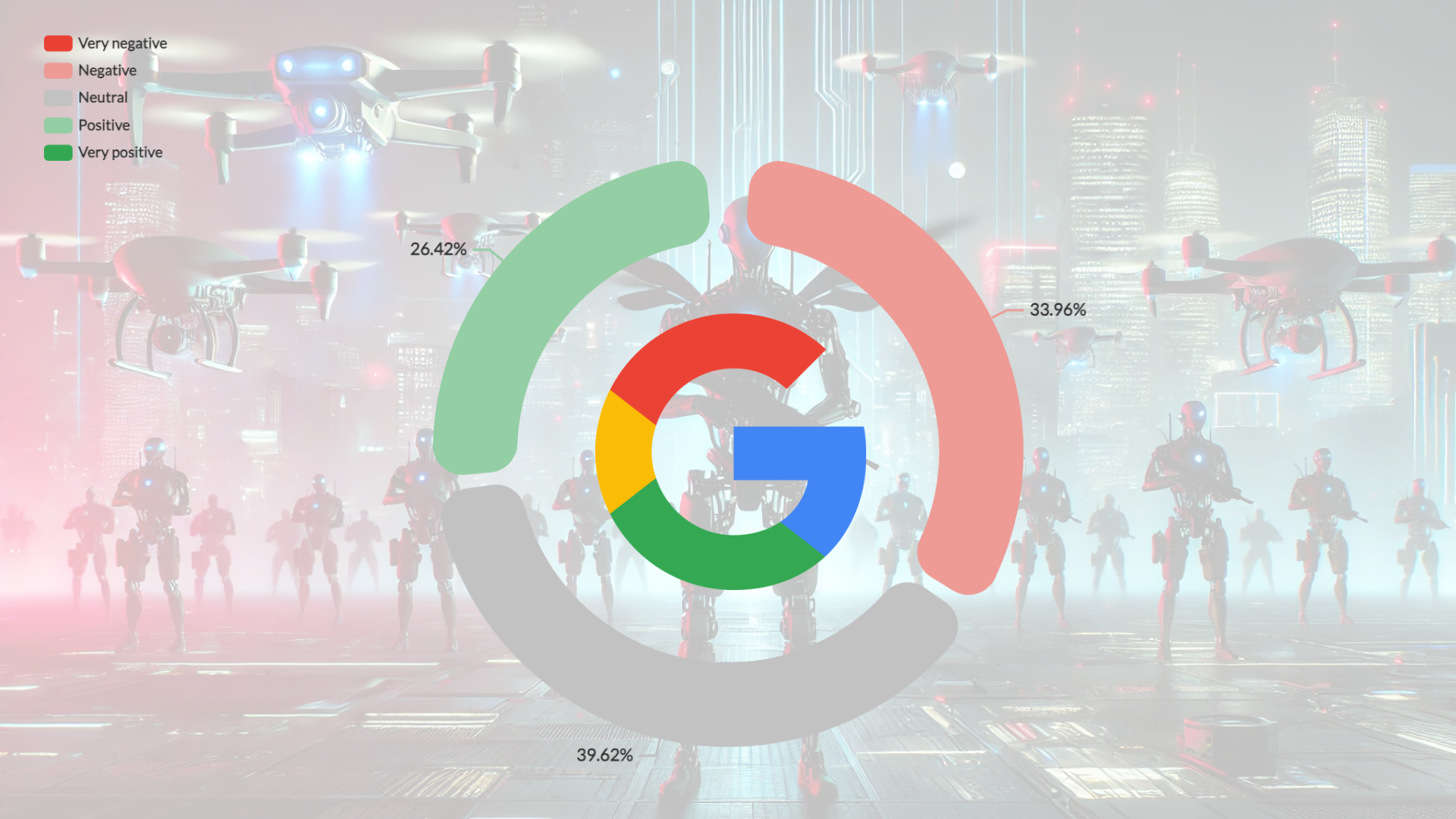

Google’s quiet AI policy change, lifting its ban on AI for military use, marks a seismic shift in tech ethics. This isn’t about innovation; it’s about defense contracts, power, and money. While the media stays fixated on geopolitical debates, the decision-makers at Google remain in the shadows.

The next war won’t be fought by soldiers. It will be fought by algorithms that don’t hesitate, don’t feel, and don’t take responsibility.

For years, Google has claimed the moral high ground, preaching about responsible AI and ethical guidelines. Now it has done what every major corporation does when big money is on the table: it has changed the rules. On February 4, 2025, Google quietly updated its AI principles, removing its long-standing ban on AI for weapons and surveillance. And just like that, a company that once distanced itself from military applications is now fully on board with the defense industry.

The timing? Suspiciously perfect. Right after a disappointing earnings report and amid growing U.S.-China tensions, Google suddenly realizes that national security is important and that AI can “protect people.” But let’s call it what it is: a business decision. Google isn’t pivoting—it’s cashing in. This isn’t about protecting anyone; it’s about chasing defense contracts and staying competitive with Amazon and Microsoft, both of which have been deep in military AI for years.

AI Weapons: The New Face of Warfare

Modern warfare is already being reshaped by AI and autonomous weaponry. This isn’t science fiction—it’s happening now. The increasing reliance on AI in military strategy is making war more detached, more automated, and more ethically complex than ever before.

- No human skin in the game – AI doesn’t hesitate, doesn’t question, and doesn’t feel. Autonomous systems are being designed to select and engage targets without direct human oversight. But what happens when the wrong target is chosen?

- The accountability gap – Generals and decision-makers can hide behind algorithms when things go wrong. If an AI-powered drone misidentifies a target and kills civilians, who takes responsibility? The machine? The developer? The military commander? AI can become a convenient scapegoat for deadly mistakes.

- Proxy wars without boots on the ground – Governments can deploy AI-driven warfare without sending troops, reducing political and public scrutiny. When there are no soldiers at risk, war becomes easier to justify, cheaper to fight, and harder to regulate.

We’ve already seen cases where metadata analysis has failed to distinguish between a civilian and a combatant. And yet, technology is increasingly being used as an excuse to avoid responsibility. When AI-driven weapons cause destruction, will we hear the same tired defense: “The system made a mistake”?

There’s a dangerous assumption that AI will make war more precise and controlled. But in reality, it raises new moral, ethical, and legal dilemmas that we aren’t prepared to handle.

Surveillance: It Won’t Just Be Used on “Bad Guys”

If AI-powered surveillance were only about stopping terrorists, we might be having a different conversation. But military technology always finds its way into civilian life, and history shows exactly how this plays out:

- Facial recognition started as “security” but is now used for mass surveillance in places like China.

- Drones started as military tech but are now being used by police departments to monitor protests.

- Predictive policing algorithms—which were supposed to reduce crime—ended up disproportionately targeting minority communities.

So when Google says its AI will support national security, we have to ask: who defines security? Because surveillance AI won’t just track enemies of the state—it will track journalists, activists, political opponents, and regular citizens.

And with AI legislation still playing catch-up, what’s stopping governments from using these tools however they see fit?

The Ethics Debate That Needs to Happen—Now

Google says it will assess “whether the benefits substantially outweigh potential risks.” But we’ve heard this before—this is the same argument that has justified every ethically questionable technology in history.

- Nuclear weapons? Developed for protection, now a global threat.

- Social media? Meant to connect people, now a tool for misinformation and manipulation.

- AI for national security? Framed as “protecting democracy,” but we know how these stories end.

If AI is going to be deployed in warfare and surveillance, the media and policymakers need to start asking tougher questions:

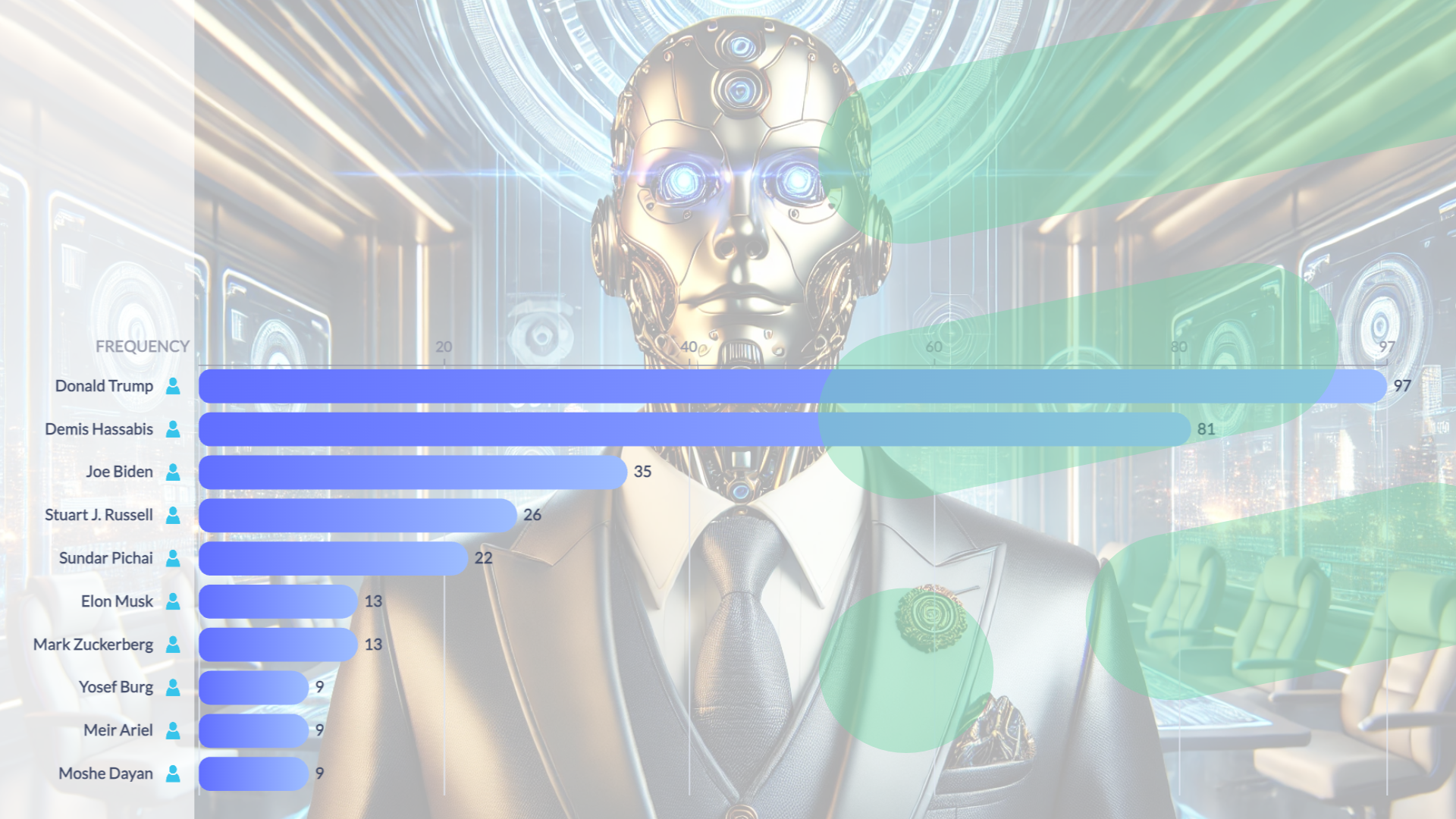

- Who is influencing these decisions? Which government officials and tech executives are pushing for this shift?

- Where’s the accountability? If an AI-driven attack kills civilians, who takes the blame?

- What are the ethical red lines? Is there even such a thing anymore?

Tech giants don’t want to have this debate. They want you to believe they’re leading AI development for the good of humanity. But this isn’t about ethics, morality, or making the world a better place. It’s about money, power, and influence—and they don’t want you to look too closely.

Not All AI in Defense Is About War: Some Uses Are Ethical

It’s easy to look at AI’s role in warfare and surveillance and see nothing but dystopia. But not all AI in defense is about lethal autonomous weapons or mass surveillance. AI can also be used to prevent conflicts, improve intelligence, and enhance security—without crossing ethical lines.

Take Event Registry, for example. Our AI doesn’t select targets or make battlefield decisions, but it does empower analysts, policymakers, and security experts with real-time intelligence that can prevent crises before they escalate.

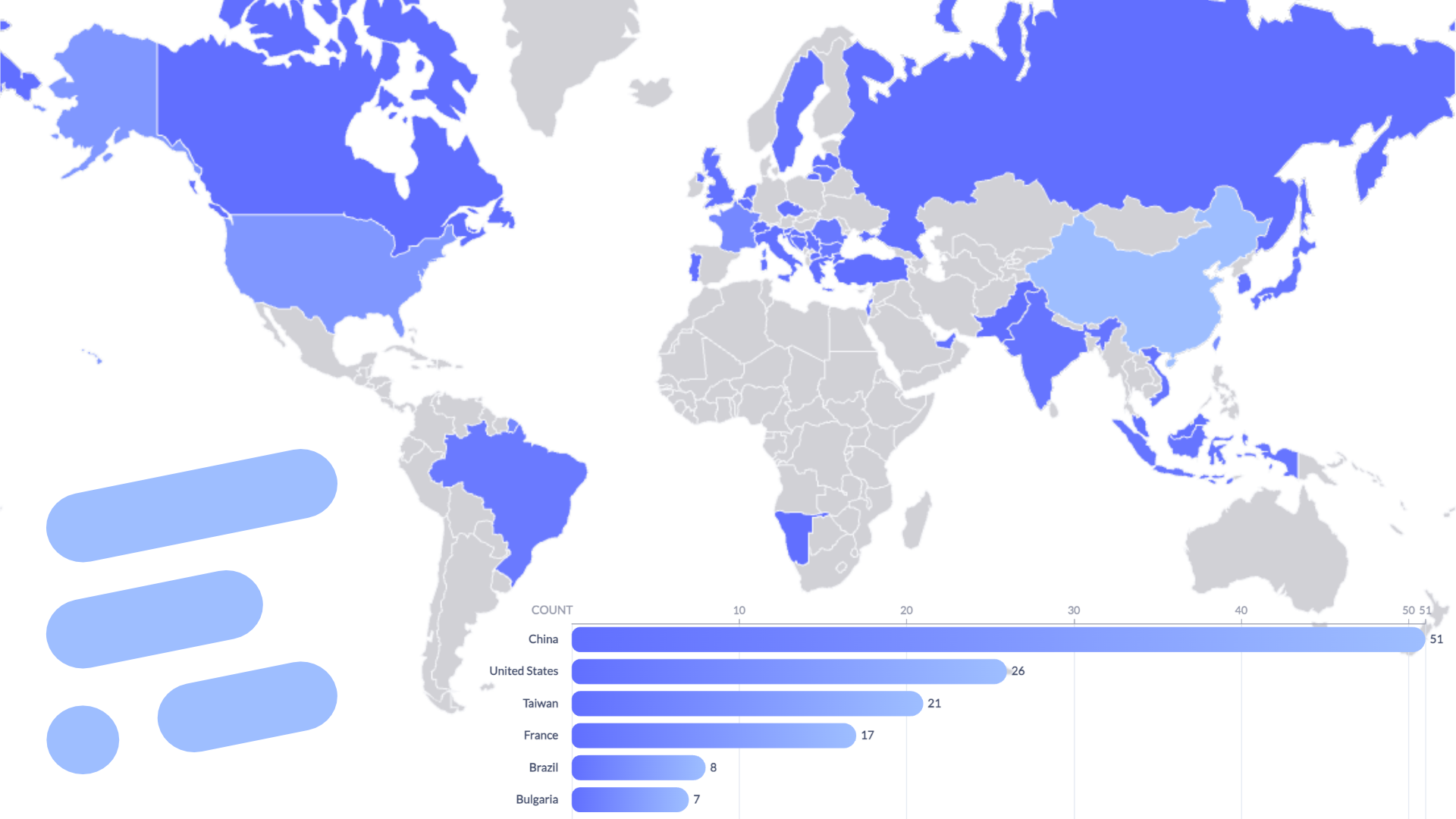

✔ Tracking emerging geopolitical risks – By monitoring news, reports, and intelligence from around the world, Event Registry helps organizations identify brewing conflicts, disinformation campaigns, and potential threats before they explode into crises.

✔ Fighting disinformation – AI-driven information warfare is a real concern, with state actors, extremist groups, and propaganda networks actively spreading false narratives to manipulate public opinion and destabilize regions. While AI cannot directly label something as “false” or “true,” Event Registry helps analysts track the flow of information across sources and geographies to identify patterns that may signal disinformation campaigns.

✔ Global security monitoring – Governments and security experts use Event Registry to analyze patterns in global events, providing early warnings about terrorism, cyberattacks, and geopolitical instability—all without violating civil rights or privacy.

This is the kind of AI that should be leading in defense—one that enhances intelligence without replacing human judgment, protects societies without eroding freedoms, and improves security without creating new ethical dilemmas.

But this is just the beginning of the conversation. We need to explore the right way to integrate AI into defense—and how companies can ensure they’re on the right side of history.

Final Thought: We Need a Wake-Up Call

Google’s AI shift isn’t just about one company—it’s about the direction of AI as a whole. If Silicon Valley is embracing AI-driven weapons and surveillance, we’re entering an era where critical decisions in warfare and security could be made by algorithms instead of human judgment.

The real challenge? Accountability. Who takes responsibility when AI-driven systems make high-stakes decisions? How do we ensure transparency in technology that operates at speeds beyond human oversight?

These are the questions we need to ask now—not when the consequences are already unfolding. Will the media, lawmakers, and the public demand clarity, or will we let these shifts happen without scrutiny?